Jan 2018

Q Learning Starcraft

To make an AI for a game you need: 1 — The AI logic, whether this is scripted behavior or artificial intelligence. 2 — To convert your game world into something your ai can understand and act upon (API-ifying your game). This post aims to show you the methods for building AL logic, from scripted behavior to methods that can learn almost any task. This should be considered an intro post for those with some programming skills to get into bot building. We will build an AI that can play a Starcraft II minigame. We’re going to use python, numpy, pysc2. Let’s go!

Setup pysc2

If you want to make a game agent, you need to create an interface/api for your game that an AI can use to see, play, and take actions in your game world. We are going to use Starcraft II, specifically an environment released by deepmind and google called pysc2. First we need to install Starcraft II (it’s free); I’m using linux so:

Make sure you get version 3.17 of the game as newer versions don’t seem to work with some of the pysc2 functions (see run_configs/platforms). It takes about 7GB of space once unzipped, and to unzip the password is ‘iagreetotheeula’. I had some trouble getting the 3D view to render on ubuntu.

If you use a mac make sure to keep the game installed in the default location (~) and create ‘Maps’, ‘Replays’ folders in the main folder. Use the installer. Now let’s insall pysc2 and pytorch:

Now we need to get the sc2 maps we will use as a playground for our agent:

Get the mini games maps at that link.

For some reason the mini game zip file on pysc2’s github didn’t work on linux. So I unzipped it on my mac and just moved it to my linux machine. Put the mini_games folder into the `Maps` folder in your StarcraftII Installation folder. The minigame maps actually come with pysc2 but who knows if deepmind will continue to do that. Ok now we have all the software and maps let’s program our first agent and examine the details of the pysc2 environment.

A Implementing a random agent

The AI we are going to make is going to be playing the move to beacon game. Our AI will control a marine (small combat unit) and move it around to get to the beacon.

I’m going to make a simple agent that can interact with this environment. It’s going to move around the map randomly:

Here is a video of the above code running; the agent moves randomy around the map:

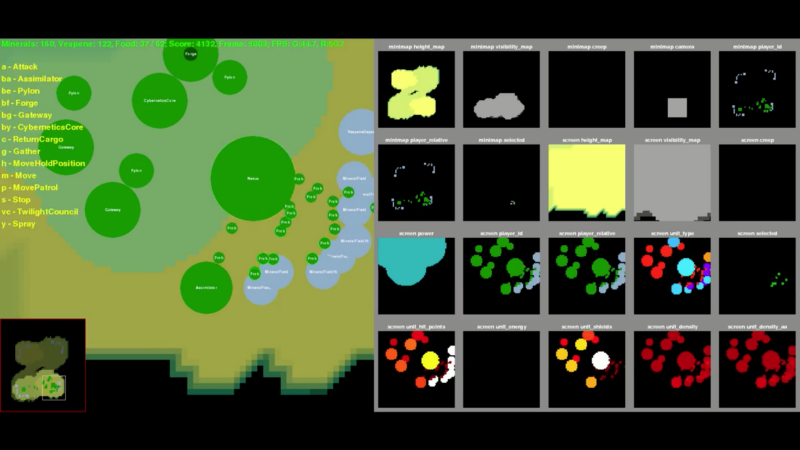

The feature maps look like this:

Nothing crazy happens here. You can see the main view with a marine (green) and the beacon (grey blue). The marine just moves around randomly like we told it to. The right part of the screen are all the different views our bot can see. The vary from unit types on the screen, to terrain height maps. To see more code/explanations for this section please see this notebook.

Another example of feature layers with more readable text:

Implementing a scripted AI

Now we want to do something a bit better than random. In the move to beacon minigame the goal is to move to the beacon. We’ll script a bot that does this:

Here is a video of this bot playing:

You can then view the replay in the Starcraft II game client:

As you can see the scripted AI plays the beacon game and moves the marine to the beacon. This scripted bot gets an average reward of 25 per episode after running for 105 episodes. This reward is a reflection of how good our bot is at reaching the becaon before the mingame timer is up (120 sec). Any AI we develop should be at least as good as this scripted bot, so a mean score of 25 once trained. Next we will implement an actual AI (one that learns how to play) using reinforcement learning.

Implementing a Q Learning AI

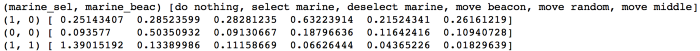

This method is a variant of something called ‘Q Learning’ that tries to learn a value called ‘quality’ for every state in the game world and attributes higher quality to states that can lead to more rewards. We create a table (called a Qtable) with all possible states of our game world on the y axis and all possible actions on the x axis. The quality values are stored in this table and tell us what action to take in any possible state. Here is an example of a Qtable:

So when it has the marine selected but its not at the beacon, state=(1, 0), our agent learns that moving to the beacon has the highest value (action at index 3) compared to other actions in the same state. When it doesnt have the marine selected and its not at the beacon, state=(0,0), our agent learns that select the marine has the highest value (action at index 1). When it is one the beacon and it has the marine selected, state=(1,1), doing nothing is valuable. When we update the Q Table in the update_qtable function we’re following this formula:

It basically says ‘take our estimate of the reward of taking an action and compare it against actually taking the action.’ Then take this difference and adjust our Q values to be a little less wrong. Our AI will take state information and spit out an action to take. I‘ve simplified this world state and the actions to make it easier to learn the Q table and keep the code concise. Instead of hardcoding the logic to always move to the beacon we give our agent a choice. We gave it 6 things it can do:

`_NO_OP` — do nothing

`_SELECT_ARMY` — select the marine

`__SELECT_POINT` — deselect the marine

`_MOVE_SCREEN` — move to the beacon

`_MOVERAND` — move to a random point that is not the beacon

`_MOVE_MIDDLE` — move to a point that is in the middle of the map

Here is our Q table code for a pretrained agent:

Run this agent with pretrained code by downloading the two files (agent3_qtable.npy, agent3_states.npy):

This AI can match the reward of 25 per episode our scripted AI can get once it is trained. It tries a lot of different movements around the map and noticed that in states where the marine and beacon positions are on top of each other it gets a reward. It then tries to take actions in every state that lead to this outcome to maximize reward. Here is a video of this AI playing early on:

Here is a video once it learns that moving to the beacon provides a reward:

Conclusion and Future Posts

In this post I wanted to show you three types of programmed AI behavior, random, scripted, and Q learning agent. As Martin Anward of Paradox development says: “Machine learning for complex games is mostly science fiction at this point in time.” I agree with Martin on several of his points. Still I think there’s potential for machine learning in games. The rest of the thread is how to make a good AI using a weighted lists; neural nets are the same thing as a weighted list — but learned. What’s difficult, and Martin is right here, is that the level of complex interactions is difficult for a computer to piece together and reason about.

Here is our Q learning beacon AI again, playing on normal speed this time:

If you enjoyed this post, be sure to signup for more.